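This article was generated from the Markdown source of the original post, as backed up from the old VeriMake forum.

Original post title: Using an FPGA to Visualize "Noise" from Outer Space | Inspired by Space Sound Effects (SSFX)

Original post URL: https://verimake.com/topics/107 (old forum URL, no longer valid)

Original author: Maggie (old forum id = 107, registered 2020-04-26 09:34:15)

The post was first published by the author on 2020-06-08 20:43:08 and last edited on 2020-06-08 22:00:22 (the edit time may be inaccurate).

When the database was backed up on 2021-12-18 14:27:30, the original post had 1882 views, 1 like, and 1 reply.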

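What is SSFX?

What does space sound like? It is a mysterious question. It was not until I saw the SSFX website that I realized how close we are to remote outer space. SSFX (Space Sound Effects) is a physics and astronomy project at Queen Mary University of London. In 2017 it organized a worldwide short-film competition (yes, I know that was a long time ago) and incorporated the films into its anthology film. The anthology was released on YouTube by Martin Archer on 16 October 2018.

Where does our inspiration come from?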

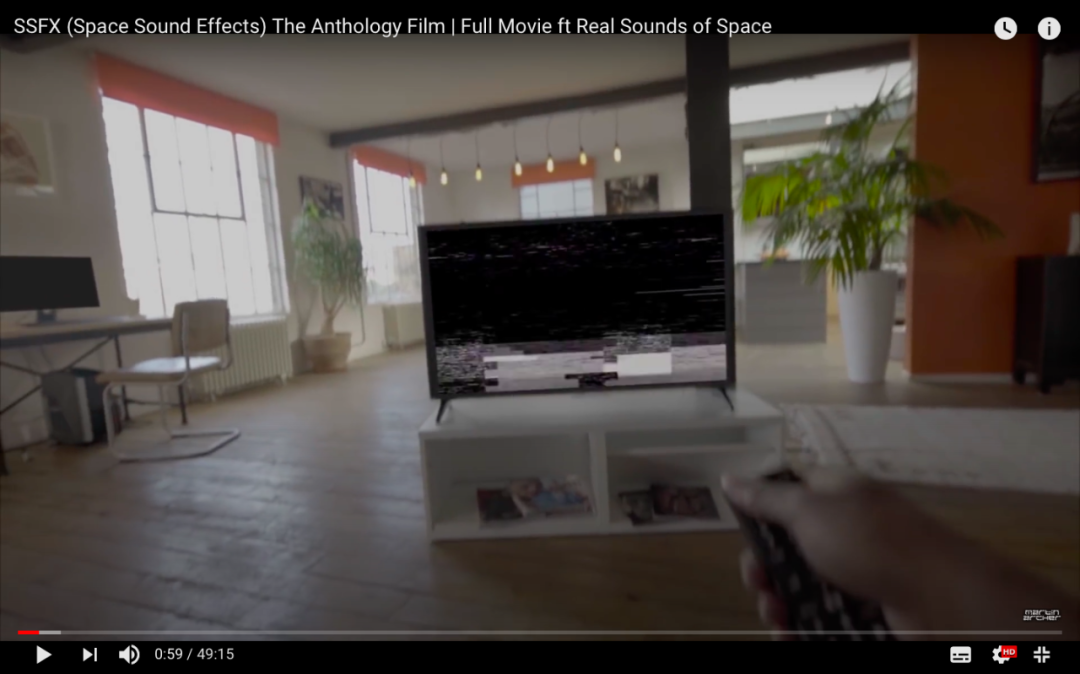

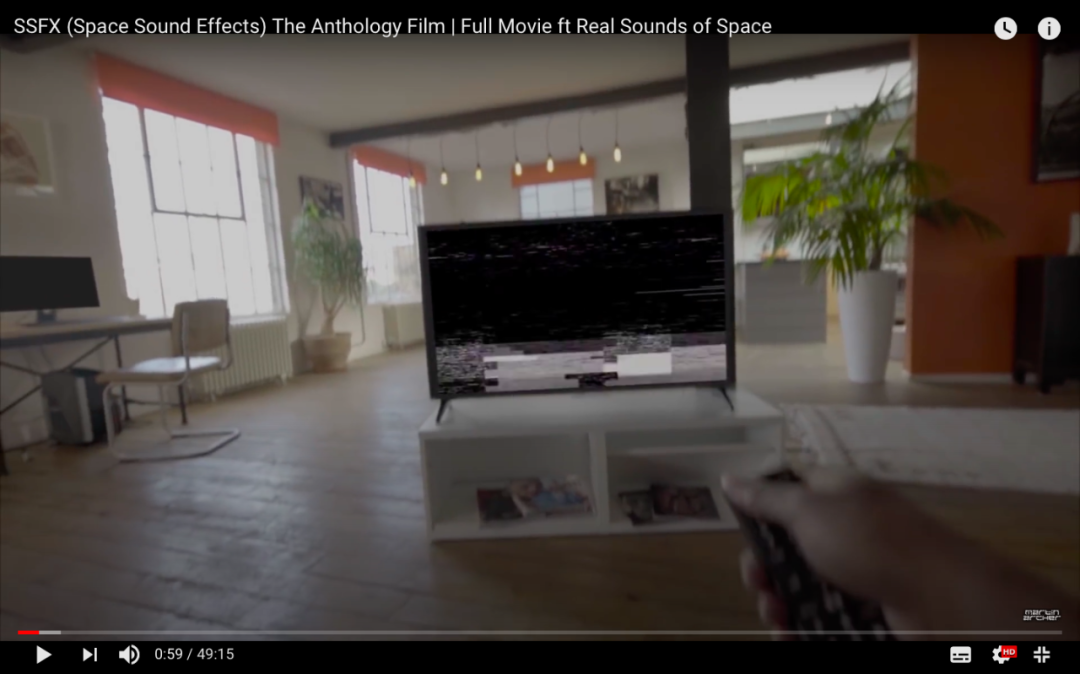

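Their full film, including all the fascinating space sounds and narrative scenes, impressed and inspired me deeply. Of course I love all the short films made by these independent filmmakers, but what interests me most is the opening of the full film and the segments that tie all the award-winning shorts together.

They shot the film from a first-person perspective, which makes it engaging and immersive. In post-production they also used green-screen technology to create signal-interruption effects on the screens. The idea they wanted to convey is that space sound affects and interferes with technology on Earth.

Screenshot from the full film on YouTube.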

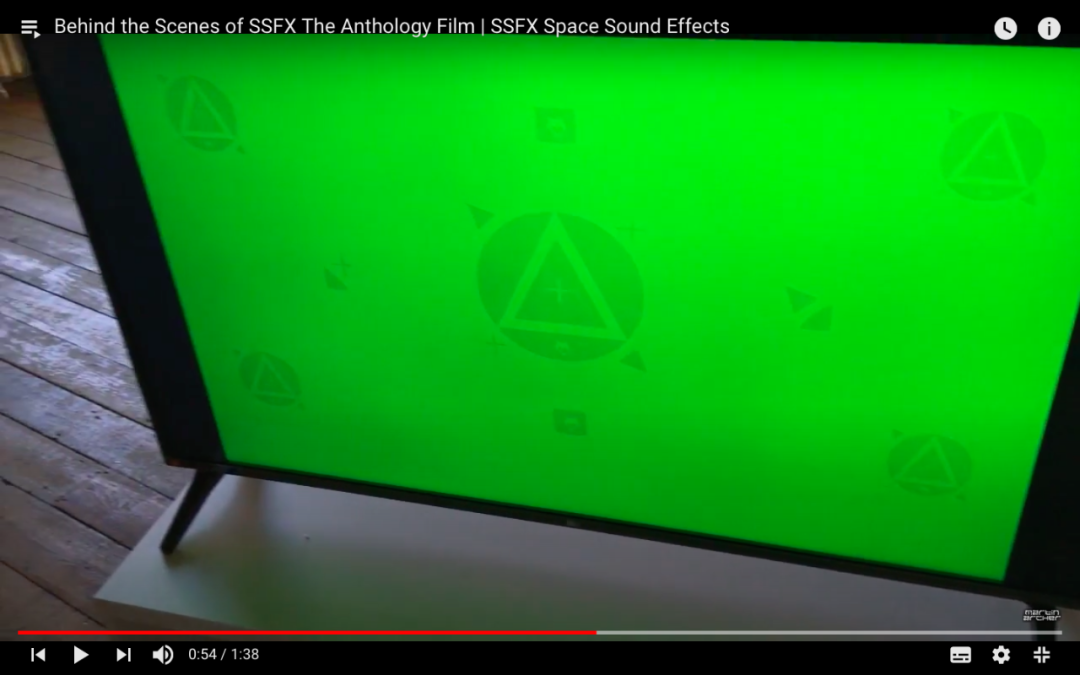

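After watching their behind-the-scenes footage, I learned that all the on-screen effects in the video were achieved with green screens and tracking markers.

Image from their behind-the-scenes video.

What did we do?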

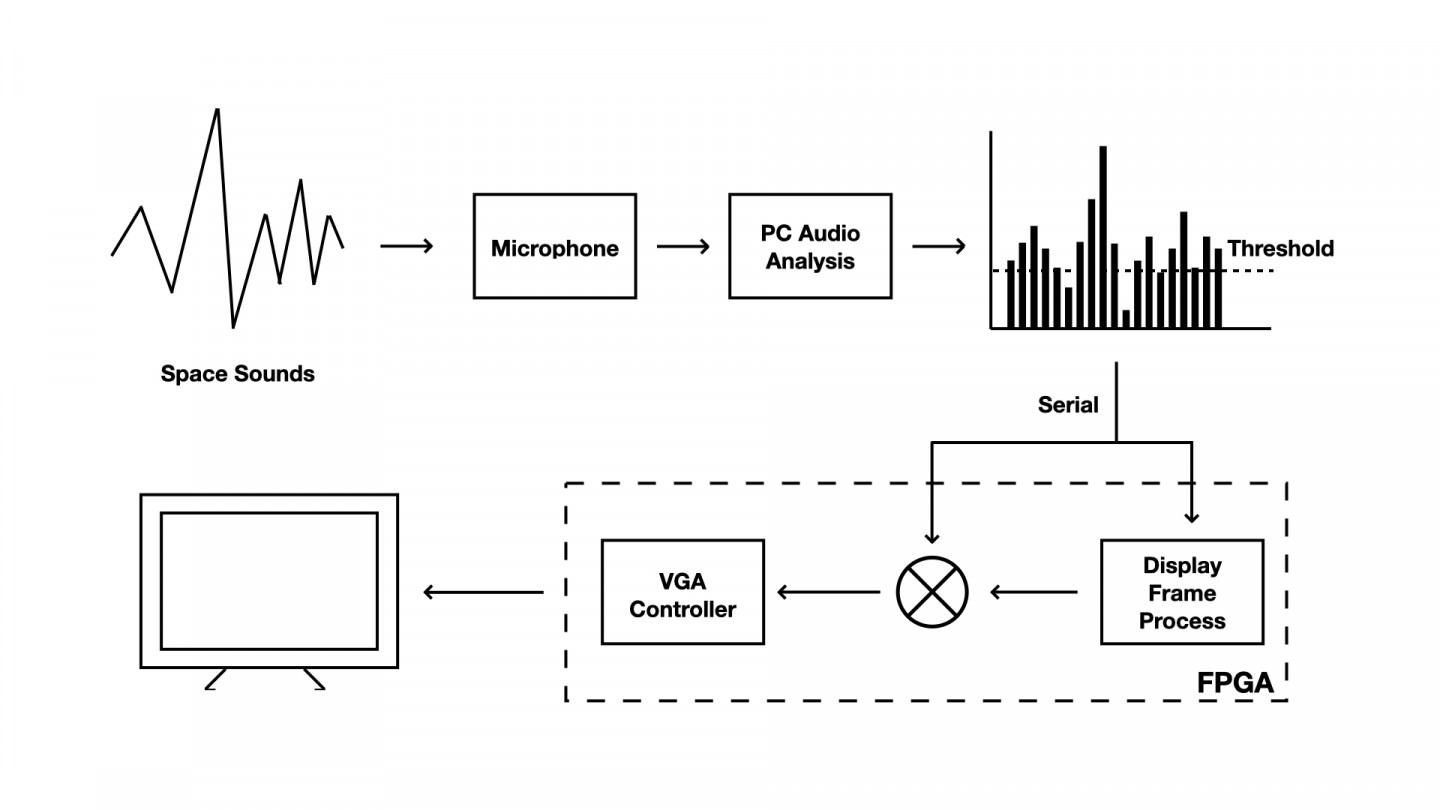

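I really like these cool signal-interference effects on screen, and I especially wanted to see whether we could use Python code and hardware in place of post-production to achieve a similar effect. We discussed it and thought an FPGA was worth a try. To make the system more interactive, we decided to synchronize the screen in real time with the sound intensity detected by the PC.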

[https://www.bilibili.com/video/BV1MK411p7Tm?spm_id_from=333.999.0.0](https://www.bilibili.com/video/BV1MK411p7Tm?spm_id_from=333.999.0.0)

Code and Structure

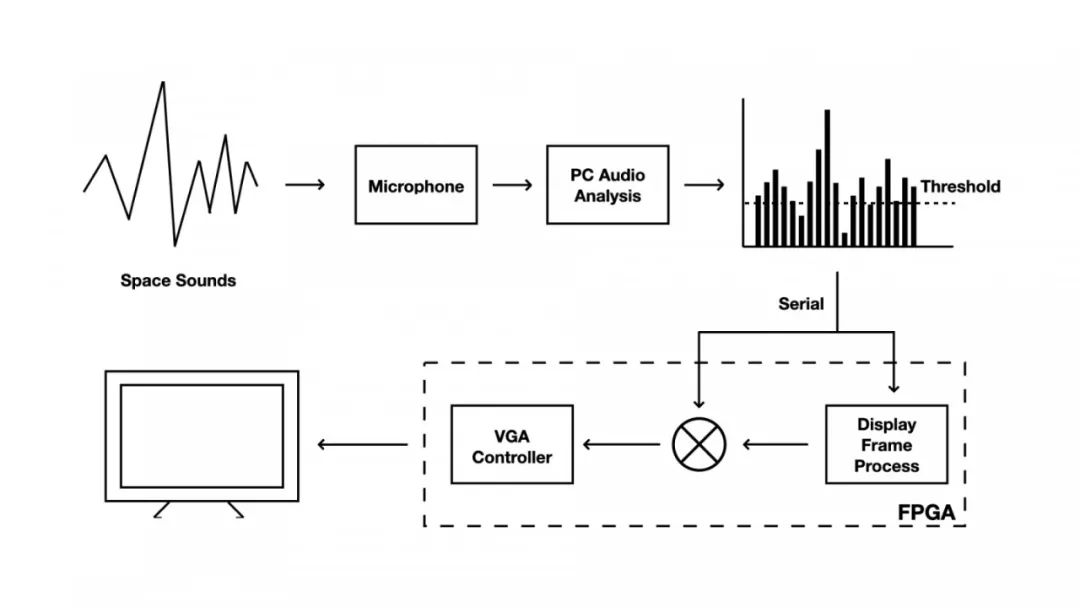

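System block diagram

Process

- After the PC captures the sound wave through its microphone, it quantizes the signal and analyzes it to obtain the amplitude.

- When the sound intensity reaches the threshold, the PC sends data to the FPGA over the serial port to open the path that carries display data to the VGA controller. Frame data is then passed to the VGA controller; these frames are random black-and-white flickering images generated from clock pulses.

- If the sound intensity does not reach the threshold, the data in the display frame processing module is not sent to the VGA controller, and the screen shows nothing because it receives no signal.

- In our algorithm, the sound intensity data is also sent over the serial port to the display frame processing module. This keeps the snow effect on screen synchronized with the sound intensity in real time: the amplitude of the sound directly determines the ratio of black to white dots on screen.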
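The PC side of the process above could look roughly like the following. This is a minimal sketch, not the project's actual code: the 16-bit little-endian PCM input, the `THRESHOLD` value, the one-byte intensity encoding, and the function names `rms_level`, `intensity_byte`, and `report_chunk` are all assumptions made for illustration. The `port` argument is any object with a `write(bytes)` method.

```python
import io
import math
import struct

# Assumed normalized RMS level (0.0-1.0) above which data is sent to the FPGA.
THRESHOLD = 0.05


def rms_level(pcm_bytes: bytes) -> float:
    """Return the RMS amplitude of 16-bit little-endian mono PCM, scaled to 0.0-1.0."""
    count = len(pcm_bytes) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack("<%dh" % count, pcm_bytes[: count * 2])
    mean_square = sum(s * s for s in samples) / count
    return math.sqrt(mean_square) / 32768.0


def intensity_byte(level: float) -> int:
    """Quantize a normalized level to a single byte (0-255), clamping out-of-range input."""
    return max(0, min(255, int(level * 255)))


def report_chunk(pcm_bytes: bytes, port) -> bool:
    """Write one intensity byte to `port` if the chunk reaches the threshold.

    Returns True when a byte was sent, False when the chunk was too quiet.
    """
    level = rms_level(pcm_bytes)
    if level < THRESHOLD:
        return False  # nothing is sent, so the display path stays closed
    port.write(bytes([intensity_byte(level)]))
    return True
```

For a dry run, `io.BytesIO()` can stand in for the serial port. With real hardware, a pyserial `serial.Serial` object opened on the board's port would be passed as `port` instead; the port name and baud rate depend on the setup and are not given in the post.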

If you are interested in our code, please see the source code on Mengrou's GitHub page.

What is SSFX?

What does space sound like? It is a mysterious question. Not until I saw the SSFX website did I realize how close we are to remote outer space. SSFX (Space Sound Effects) is a Physics & Astronomy project at Queen Mary University of London. In 2017 it organized a worldwide short-film competition (yes, I know that was a long time ago) and incorporated the films into its anthology film. The anthology was released on YouTube by Martin Archer on 16 October 2018.

Where does our inspiration come from?

I was deeply impressed and inspired by their full film, including all the fascinating space sounds and narrative scenes. Of course I love all the short films made by those independent filmmakers, but I am particularly interested in how Martin and his team opened the full film and connected all the subsequent short films.

They adopted a first-person perspective in the filming to achieve a really engaging and immersive feeling. They also created signal-interruption effects on the screens with green-screen technology in post-production. Their idea is that space weather and space sound affect and interfere with the technology in the person's apartment.

Screenshot from the full film on YouTube.

After watching their behind-the-scenes footage, I learned that all the screens in the apartment were fitted with tracking markers.

Green screens and tracking markers, as shown in their behind-the-scenes footage on YouTube.

What did we do in our project?

I really like these cool flickering noise effects on screen, and I wondered whether we could achieve a similar effect with Python code and hardware instead of post-production. Our crew discussed this and decided to use a field-programmable gate array (FPGA). To enhance the system's interactivity, we decided to synchronize the screen with the sound intensity detected by the PC.

This is a brief demonstration of the effect we achieved. Please check our full video at the link below.

[https://www.bilibili.com/video/BV1MK411p7Tm?spm_id_from=333.999.0.0](https://www.bilibili.com/video/BV1MK411p7Tm?spm_id_from=333.999.0.0)

Code and Structure

System block diagram

Process

- The PC captures the sound wave with its microphone.

- It then quantizes the signal and analyzes the sound intensity.

- When the sound intensity reaches the threshold, the PC turns on the switch in the FPGA over the serial port. The display frame data is then transmitted to the VGA controller and appears on screen as random flickering generated from clock pulses.

- If the sound intensity does not reach the threshold, there is still data in the display frame processing module, but it is not sent to the VGA controller. The screen therefore receives no signal and displays nothing.

- Because the sound intensity data is also sent to the display frame processing module, the algorithm adjusts the ratio of black to white pixels on screen according to the detected sound intensity.
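The FPGA side of the steps above can be modeled in software to see how the intensity value might control the black-to-white ratio. The post does not say how the random frames are generated, so the 16-bit linear-feedback shift register (LFSR) below is an assumption (a common hardware pseudo-random source), as are the names `lfsr16_step`, `noise_frame`, and `white_ratio`, the seed, and the `threshold` default. It is a behavioral sketch in Python, not the project's HDL.

```python
def lfsr16_step(state: int) -> int:
    """Advance a 16-bit Fibonacci LFSR (taps 16, 14, 13, 11) by one clock."""
    bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
    return (state >> 1) | (bit << 15)


def noise_frame(intensity, width, height, state=0xACE1, threshold=13):
    """Model one frame of snow for an intensity byte (0-255).

    Returns (frame, next_state). The frame is None when the intensity is
    below the threshold, which models the closed path to the VGA controller.
    """
    if intensity < threshold:
        return None, state
    frame = []
    for _ in range(height):
        row = []
        for _ in range(width):
            state = lfsr16_step(state)
            # A pixel is white (1) when the low byte falls below the intensity,
            # so a louder sound yields a larger share of white pixels.
            row.append(1 if (state & 0xFF) < intensity else 0)
        frame.append(row)
    return frame, state


def white_ratio(frame) -> float:
    """Return the fraction of white pixels in a frame."""
    total = sum(len(row) for row in frame)
    return sum(sum(row) for row in frame) / total
```

Passing the returned `next_state` back in as `state` for the following frame keeps the pattern changing from frame to frame, which is what produces the flicker. In hardware the same comparison would be one comparator per pixel clock, which is why an LFSR is a plausible fit here.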

If you are interested in our source code, please check Mengrou’s GitHub page.

Website ICP filing number: ICP备16046599号-1